A man, a camera, a Pi, and 80 awkward portrait photos of 5 work colleagues: an insight into building our new smart office assistant using facial recognition.

The idea for this R&D project started one sunny day in our Glasgow studio, when we were looking for one of our directors. We knew he was in, but just couldn’t figure out where, at least not without opening the solid doors to every meeting room. So, we decided to build a piece of software to track him!

We had toyed with the idea of building our own smart office assistant for some time and had already put together some R&D prototypes, e.g. a voice skill for checking meeting room availability called Jarvis, and our coffee rota dashboard. So, we thought, why not build on top of those, and call it Osborne?

Making Osborne!

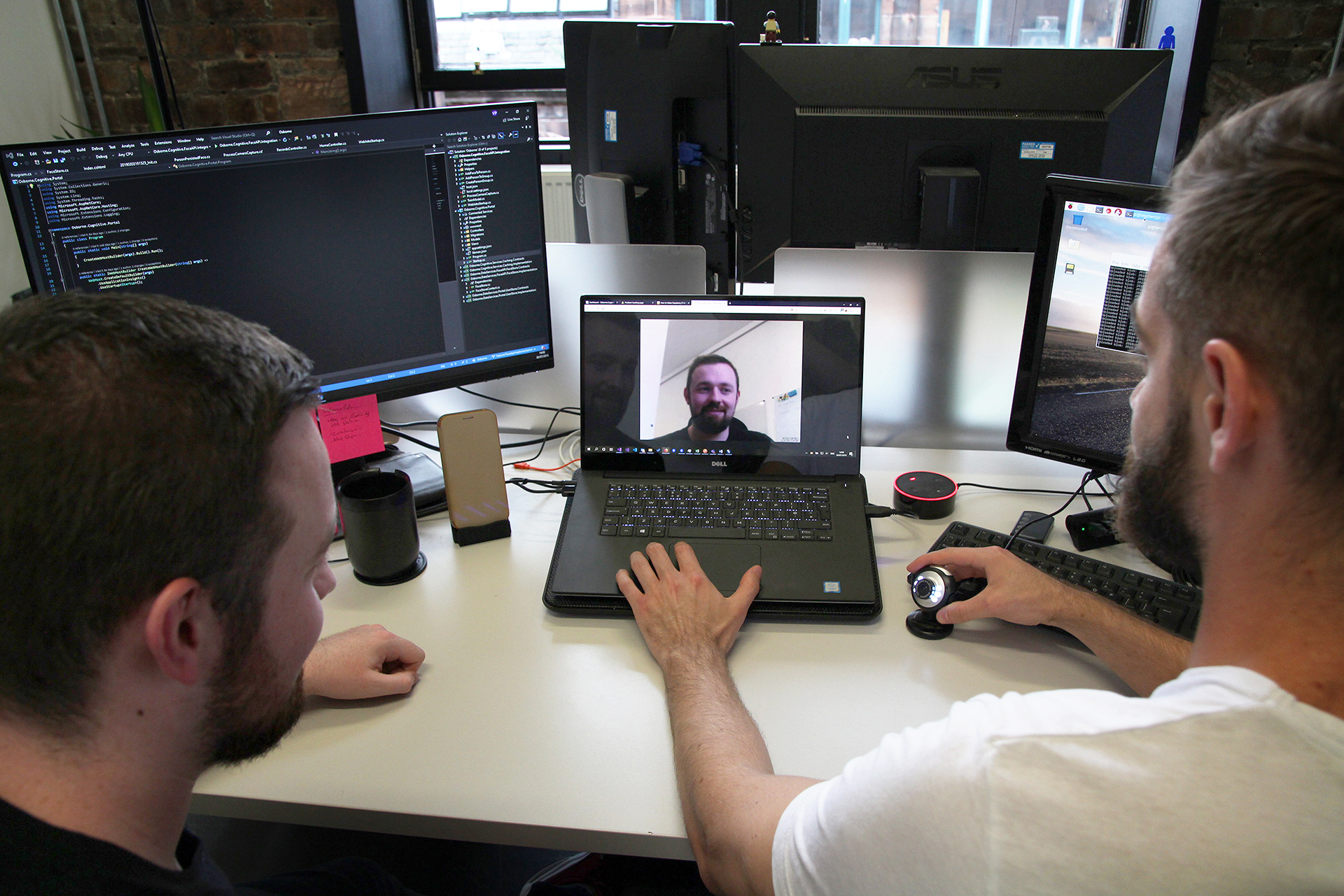

As a first phase of work on our little assistant, we aimed to have a proof of concept that would show how we could monitor and display the status of a meeting room and its participants. Using a webcam, we would take pictures based on motion detection and, making use of Microsoft’s Cognitive Services (more precisely, the Face API), we could detect who is in the room, then display the participants on a dashboard to those outside the room, scared to peek in.

We split the functionality into three steps:

- Live data collection

- Processing the data into information, and persisting it

- Summarizing the information into a shape that can be consumed as knowledge

Off the back of those steps, we planned and created a backlog for the project to help define what we wanted to achieve and how we wanted to achieve it.

Face API pre-requisites

Since we were about to use Cognitive Services’ Face API, we started by setting up our account and exploring the API concepts.

Face API works with the following concepts, in hierarchical order: Person Groups, Persons, and Faces.

A Person Group is the model that Osborne checks photos against to verify any faces it recognises. We gave the group a name and an ID, then started creating Persons within it. The Persons are individual entities with a name and an auto-generated unique id. For our PoC we defined 5 people within 1 Person Group and, for each person in the group, we added a set of portrait images containing their face, needed to tell Osborne “the face in this picture is this person”.

Once pictures were added to all Persons in the group, we began training the model, i.e. we told the service to go away, look at all the pictures for each person, and understand those people’s defining face characteristics. With this task successfully completed, Osborne was able to recognize any one of the 5 people from any picture with their face on it.

All of these processes were completed using the available .Net SDK for the Face API, installed in a group of Azure Functions, which made it easier to add more person groups, persons, and faces to persons in future. We also set up an SQL Db where we stored the details of the locations where we would set up the cameras, the person groups, and the people we registered within our person group, e.g. their name, person id as defined by the Face API, and other metadata about the person that we would want to hold.
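To make the hierarchy concrete, here is a minimal in-memory sketch of a Person Group holding Persons, each with their face images. It does not call the Face API or mirror its SDK: the class names, fields, file names, and the `ready_to_train` rule are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Person:
    # person_id stands in for the unique id the Face API generates.
    person_id: str
    name: str
    face_image_paths: List[str] = field(default_factory=list)


@dataclass
class PersonGroup:
    group_id: str
    name: str
    persons: Dict[str, Person] = field(default_factory=dict)

    def add_person(self, person: Person) -> None:
        self.persons[person.person_id] = person

    def add_face(self, person_id: str, image_path: str) -> None:
        # "The face in this picture is this person."
        self.persons[person_id].face_image_paths.append(image_path)

    def ready_to_train(self) -> bool:
        # Assumption of this sketch: only train once every person
        # in the group has at least one portrait attached.
        return bool(self.persons) and all(
            p.face_image_paths for p in self.persons.values()
        )
```

A structure like this also maps roughly onto the kind of rows described for the SQL Db: one record per person group, and one per person with their name and Face API person id.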

Live data collection

The setup for this needed to be quite simple and provide mobility, so we chose to go with a regular webcam connected to a Raspberry Pi Zero W. This provided a small sized setup that didn’t need a lot of wiring, while also covering all the technical needs for this step.

In terms of data flow, the camera would take snapshots based on a motion detection trigger using ‘Motion’ from the Motion-Project, as it was the most maintained and popular choice of software for this use case, while also being Linux compatible and easy to integrate with a USB connected camera. This allowed us to save pictures on the Raspberry Pi, which would pick them up as they came in and upload them to cloud storage in an Azure Blob container.

“The configuration of ‘Motion’ presented some challenges as it allowed for a lot of tweaking, threshold changing and customisation. Although the documentation is quite comprehensive, getting something that worked for our scenario ended up being based on a lot of trial and error.”

On top of the motion detection software, this step needed two more bits of functionality on the Raspberry Pi: a script that took the pictures and uploaded them to Blob storage, and a cron-triggered script that would clean up the images from the Raspberry Pi storage to avoid running out of space.
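As a rough illustration of the clean-up half, the sketch below deletes snapshots older than a given age from a folder. It is not the script we ran: the function name, the `.jpg` filter, and the age threshold are assumptions, and the upload to Blob storage is left out entirely.

```python
import time
from pathlib import Path
from typing import List, Optional


def clean_up_images(
    image_dir: Path,
    max_age_seconds: float,
    now: Optional[float] = None,
) -> List[Path]:
    """Delete .jpg files older than max_age_seconds and return the removed paths."""
    # Allow the current time to be injected so the function is easy to test.
    now = time.time() if now is None else now
    removed = []
    for path in sorted(image_dir.glob("*.jpg")):
        # Compare each file's last-modified time against the cut-off.
        if now - path.stat().st_mtime > max_age_seconds:
            path.unlink()
            removed.append(path)
    return removed
```

A cron entry could then call this against whichever folder Motion writes its snapshots to, with a threshold long enough for the upload script to have picked the files up first.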

Processing data and persisting the resulting information

This stage of the PoC did pretty much all the heavy lifting and was made up of services hosted on Microsoft’s cloud platform, Azure.

To kick things off, we had a blob triggered function in a .Net Core v2 Azure Function App which was triggered every time a new item was added to our blob storage container; in our case, photos from the webcam.

Once the image was extracted from the blob, it was passed on to an endpoint of the Face API to find out if there were any faces in the picture. If there were, it extracted each face’s details, e.g. the position of each face in the picture (since it can deal with group pictures as well) and each face’s characteristics, as well as some optional attributes such as age, emotion analysis, and whether the person is wearing makeup or not.

The details of each face detected in the picture were passed on to another endpoint of the Face API to check against our Person Group model, asking it ‘Hey, do any of these faces belong to people you know about?’. If the person was recognised as part of the person group, then the API would return the person’s id. At that point, we would collate this information with the details previously retrieved and create a log entry in the database for that location, with a timestamp. Even if the face was not recognised as part of the person group, we would still create a log for it, but assign an “Unknown” name to the person while populating all the other attribute fields (emotion, age, etc).
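The collation step described above can be sketched roughly as follows. This is an illustration only: the real functions used the .Net SDK, and `DetectedFace`, `LogEntry`, `build_log_entries`, and the shape of the `matches` and `known_names` dictionaries are all hypothetical stand-ins for the detection results, the identification results, and the people table.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, List, Optional


@dataclass
class DetectedFace:
    face_id: str             # temporary id for a face found in the photo
    age: Optional[float]     # optional attributes from the detection step
    emotion: Optional[str]


@dataclass
class LogEntry:
    location: str
    person_id: Optional[str]
    name: str
    age: Optional[float]
    emotion: Optional[str]
    timestamp: datetime


def build_log_entries(
    location: str,
    faces: List[DetectedFace],
    matches: Dict[str, str],       # face_id -> person_id for recognised faces
    known_names: Dict[str, str],   # person_id -> name, as held in the database
    timestamp: Optional[datetime] = None,
) -> List[LogEntry]:
    timestamp = timestamp or datetime.now(timezone.utc)
    entries = []
    for face in faces:
        person_id = matches.get(face.face_id)
        # Unrecognised faces are still logged, under the name "Unknown".
        name = known_names.get(person_id, "Unknown") if person_id else "Unknown"
        entries.append(
            LogEntry(location, person_id, name, face.age, face.emotion, timestamp)
        )
    return entries
```

Keeping the "Unknown" case in the same code path means every detected face produces a log entry with its attributes, whether or not it was matched.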

At the same time as creating the log in the SQL Database, we updated objects in an Azure Redis Cache, as the information in the cache would be polled by the public dashboard further down the line. The information here was held under a key, representing the location; similar to how in the SQL Db we had a table that stored location logs (e.g. “meeting room X, meeting room Y”).

Summarizing the information to be consumed as knowledge

To create a presentation layer for the information gathered, we chose to create a simple .Net Core Web App, hosted on an Azure App Service instance, running a Basic App Service Plan. We picked the Basic plan as this allows for the “Always On” feature to be used, something we felt this PoC needed for live usage and demo purposes. And, by using a Basic plan the costs we incurred were not too big.

With this being a PoC, the focus was more on displaying the information in a way that is easily digestible, rather than making a fully interactive dashboard. So, with this in mind, a simple Web API endpoint that returned the data to be displayed in a table on a razor page was enough to meet our needs. Furthermore, as we only used the data for the previous half an hour, our storage service was set up to purge unused images whenever it was called, to make sure it wasn’t holding any useless or dirty data.
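To show the kind of shaping the endpoint does, here is a small sketch that reduces raw sightings for one room to table rows covering the previous half hour. The function name, the tuple layout, and the row fields are assumptions for illustration; the actual endpoint was a .Net Core Web API.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple

Sighting = Tuple[str, datetime]  # (person name, time seen)


def summarise_room(
    sightings: List[Sighting],
    now: datetime,
    window: timedelta = timedelta(minutes=30),
) -> List[Dict[str, str]]:
    """Return one row per person seen within the window, most recent first."""
    latest: Dict[str, datetime] = {}
    for name, seen_at in sightings:
        in_window = now - seen_at <= window
        # Keep only the most recent sighting for each name.
        if in_window and (name not in latest or seen_at > latest[name]):
            latest[name] = seen_at
    ordered = sorted(latest.items(), key=lambda item: item[1], reverse=True)
    return [
        {"name": name, "last_seen": seen_at.strftime("%H:%M")}
        for name, seen_at in ordered
    ]
```

Note that this simplification collapses every “Unknown” sighting into a single row, which may or may not be what a real dashboard wants.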

Challenges

There are still some hurdles we’ll need to overcome in future updates to Osborne. At present the motion software we’re running on the Raspberry Pi captures a lot of pictures for every motion event, some of which may not contain someone’s face. This puts an unnecessary load on the Function app, and can at times max out the usage quota per minute for the free tier of Face API. While this didn’t impact the success of the PoC, it’s a learning point we’ll take on and improve in future iterations.

Results

Our resulting PoC displays the results of the facial recognition service in near-real time via a web page. While Osborne is still in its infancy and can only identify a handful of people, we’re pleased to have developed such a successful working prototype, and are eager to expand Osborne further.

Beyond our own curiosity to see how far we can take our new smart office assistant, working with emerging technologies such as AI and cognitive services lets us explore use cases and figure out the best applications of the technology across industries. Not only that, it helps build up our own expertise to use in future client projects.

Head back to our Lab to read more about our explorations into emerging technology and best practice development, from voice to artificial intelligence. If you’re looking to know more about where cognitive services can fit into your digital strategy, get in touch, we’d love to chat!